What is a Load Balancer? How does it work?

Efficient distribution of incoming traffic across a group of backend servers.

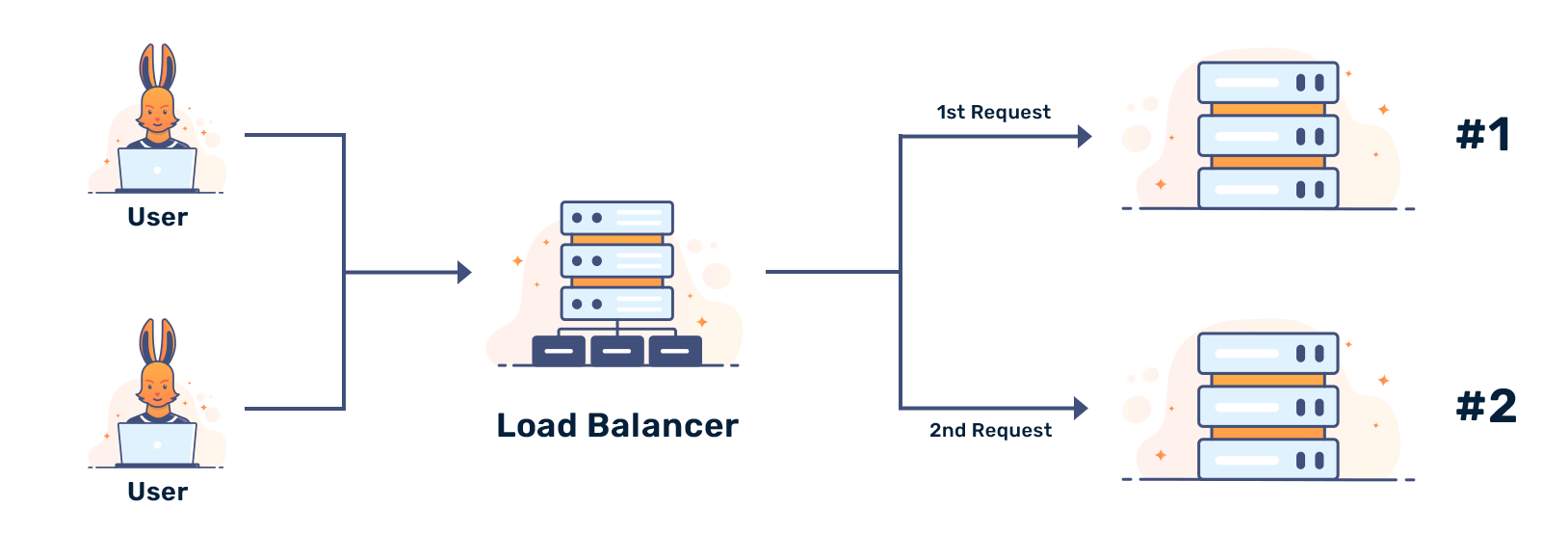

Load balancing is the process of distributing a set of tasks over a set of computing units; in this text, we refer to the computing units as servers and to the entities that send tasks as clients. The aim of load balancing is to increase the total processing capacity of the entire system, as perceived by clients, without increasing the capacity of any individual server. In doing so, load balancing improves resource utilization, facilitates scaling and helps ensure high availability.

The load-balancing policy describes how tasks are distributed to the servers. While there are many load-balancing policies, we list a few of the most common ones.

Round-Robin distributes incoming tasks to every server in sequence. After all servers have received a task, the process is repeated in the same order. This is often the default policy of load balancers.
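
A minimal Python sketch of round-robin selection, assuming servers are identified by hypothetical names such as `"s1"`; a real load balancer would choose among network addresses.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed order, starting over after the last one."""

    def __init__(self, servers):
        self._order = cycle(servers)  # endless iterator over the server list

    def pick(self):
        return next(self._order)


lb = RoundRobinBalancer(["s1", "s2", "s3"])
print([lb.pick() for _ in range(7)])
# ['s1', 's2', 's3', 's1', 's2', 's3', 's1']
```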

Random assignment assigns incoming tasks randomly. There are many variations, such as weighted random allocation, which uses weights so that overloaded servers are less likely to be selected and underutilized ones more likely.
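
Weighted random allocation can be sketched with Python's standard `random.choices`, which draws items with probability proportional to their weights. The weights below are hypothetical; how a real balancer derives them from server load is left open here.

```python
import random
from collections import Counter


def weighted_random_pick(servers, weights, rng=random):
    """Pick one server at random; its chance is proportional to its weight."""
    return rng.choices(servers, weights=weights, k=1)[0]


servers = ["s1", "s2", "s3"]
# Hypothetical weights: s1 is heavily loaded, s3 is mostly idle.
weights = [1, 2, 7]

rng = random.Random(42)  # seeded only to make the demo reproducible
counts = Counter(weighted_random_pick(servers, weights, rng) for _ in range(10_000))
print(counts)  # s3 should get roughly 70% of the tasks, s1 roughly 10%
```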

Least-work (or Least-connections) assigns the incoming task to the server that currently has the lowest load (or the lowest number of active connections).
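
A sketch of the least-connections variant, assuming the balancer itself tracks how many connections are open on each server (a least-work policy would substitute some other load metric for the counter). Server names are hypothetical.

```python
class LeastConnectionsBalancer:
    """Sends each new task to the server with the fewest active connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server with the lowest count, so ties go
        # to the server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call when the connection (task) on `server` has finished.
        self.active[server] -= 1


lb = LeastConnectionsBalancer(["s1", "s2", "s3"])
first = lb.acquire()   # 's1' (all idle, tie goes to s1)
second = lb.acquire()  # 's2'
lb.release(first)      # s1 is idle again
third = lb.acquire()   # 's1' (0 active, listed before s3)
```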

Hash-based selection computes a hash over one of the client's properties and then uses the computed value as an index to select the server. If the hash is computed from a value that stays constant, this creates a permanent association between the client and the server: all subsequent requests from this client will be forwarded to the same server. In such cases we say that the policy is sticky, since it sticks the client to the given server.
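
A minimal sketch of hash-based selection. It uses SHA-256 from `hashlib` as the hash and takes the result modulo the number of servers; both choices are assumptions for illustration. A stable hash matters here, because Python's built-in `hash()` of a string is randomized per process and would break stickiness after a restart.

```python
import hashlib


def hash_pick(client_key: str, servers: list[str]) -> str:
    """Map a client property (e.g. its IP address) to one of the servers."""
    # Stable hash: the same key gives the same digest in every process.
    digest = hashlib.sha256(client_key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["s1", "s2", "s3"]
# The same key always yields the same server: the policy is sticky.
print(hash_pick("198.51.100.7", servers) == hash_pick("198.51.100.7", servers))  # True
```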

A typical example is to use the client's IP address: first we compute a hash over the IP, and then from this value we derive an index that points to one of the servers (for example, the hash modulo the number of servers). While this creates a permanent client-server association, it is rather fragile: if a server is added or removed, the mapping changes and many associations break.
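
The fragility can be measured with a small simulation, assuming modulo-based indexing over a SHA-256 hash and a set of hypothetical client addresses. Growing the pool from four to five servers is expected to reassign roughly 80% of clients: a client keeps its server only when the hash modulo 4 equals the hash modulo 5, which holds for just 4 of the 20 possible residues modulo 20.

```python
import hashlib


def hash_pick(client_ip: str, servers: list[str]) -> str:
    # Hash the IP and take it modulo the number of servers.
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


# Hypothetical client addresses from a private range.
clients = [f"10.0.{i // 256}.{i % 256}" for i in range(10_000)]
before = ["s1", "s2", "s3", "s4"]
after = before + ["s5"]  # one server added

moved = sum(hash_pick(ip, before) != hash_pick(ip, after) for ip in clients)
print(f"{moved / len(clients):.0%} of clients were reassigned")
```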

Load balancers are typically implemented in one of two ways. The first, called layer 4 (or L4) load balancing, works at the segment or datagram level (layer 4 of the OSI model); the second, called layer 7 (or L7) load balancing, works at the application level (layer 7 of the OSI model).

Layer 4 load balancing works at the transport layer of the network protocol stack and functions much like a network address translation (NAT) device. To divert incoming traffic to the allocated server, the L4 load balancer simply rewrites the destination IP address (and port number) of the packets that carry the task. Similarly, to divert the outgoing traffic back to the requesting client, it rewrites the source IP address (and port number) of the packets that carry the response.
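
The address rewriting can be modelled in a few lines of Python. This is a simplified sketch, not a packet-level implementation: packets are plain objects, flows are keyed only by client IP and port (a real NAT would track the full connection tuple), checksum updates and flow expiry are omitted, and the round-robin choice and all addresses (from documentation ranges) are hypothetical.

```python
from dataclasses import dataclass, replace

Address = tuple[str, int]  # (IP address, port)


@dataclass(frozen=True)
class Packet:
    src: Address
    dst: Address
    payload: bytes  # never inspected by the L4 balancer


class L4Balancer:
    """NAT-style balancer: rewrites addresses, leaves payloads untouched."""

    def __init__(self, vip: Address, backends: list[Address]):
        self.vip = vip            # public address the clients connect to
        self.backends = backends
        self.flows = {}           # client address -> backend chosen for it
        self._next = 0

    def inbound(self, pkt: Packet) -> Packet:
        # A new flow gets a backend from the policy (round-robin here);
        # later packets of the same flow reuse it.
        if pkt.src not in self.flows:
            self.flows[pkt.src] = self.backends[self._next % len(self.backends)]
            self._next += 1
        return replace(pkt, dst=self.flows[pkt.src])

    def outbound(self, pkt: Packet) -> Packet:
        # Responses must appear to come from the address the client contacted.
        return replace(pkt, src=self.vip)


vip = ("203.0.113.10", 80)
lb = L4Balancer(vip, [("10.0.0.1", 8080), ("10.0.0.2", 8080)])
request = Packet(src=("198.51.100.7", 51000), dst=vip, payload=b"GET / HTTP/1.1\r\n\r\n")
forwarded = lb.inbound(request)    # dst rewritten to ("10.0.0.1", 8080)
response = Packet(src=forwarded.dst, dst=request.src, payload=b"HTTP/1.1 200 OK\r\n\r\n")
returned = lb.outbound(response)   # src rewritten to the VIP
```

Note that the balancer never looks at `payload`, which is why this approach works for any application protocol.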

Such an approach is well suited for network load balancing. It is fast, efficient and secure, and it is often implemented in hardware. Since the load balancer only modifies the layer 4 packets and does not inspect the content of the exchanged messages, it is general and applicable to many application-layer protocols. However, the very same reason (working on layer 4 without inspecting the content) also limits the load balancer's intelligence and, consequently, its capabilities.

At the network level, there is a single connection from the client to the server, and the load balancer merely relays the traffic. One can imagine the L4 load balancer as a router that directs traffic from the public network, from which clients send requests, onto the private one, on which the servers reside; but instead of routing packets by their destination address, it routes them according to the load-balancing policy.

Layer 7 load balancers work at the application layer of the network protocol stack. They act on the session contents and effectively behave like proxies. At the network level there are two distinct connections: one from the client to the load balancer and another from the load balancer to the server that handles the request.

These two connections need not be the same, and often they are not. For instance, the first connection might be secured with TLS while the second is unsecured (because, for instance, it runs over a secure private network). Or the first connection may use IPv6 while the private network only supports IPv4.

Such load balancing is well suited for server load balancing. Since both connections involve the load balancer, it can read, analyze and even modify the task contents. This gives much more flexibility than L4 load balancing, at the price of somewhat more computing overhead (which, with modern hardware, is becoming less of an issue).
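
As an illustration of reading and modifying the contents, the sketch below picks a backend pool by HTTP path prefix and inserts an `X-Forwarded-For` header before forwarding. Path-based routing is just one example of what an L7 balancer can do; the pool names are hypothetical, and the HTTP/1.1 parsing is deliberately minimal (no validation, no handling of bodies or malformed requests).

```python
def route_request(raw: bytes, routes: dict[str, str], default: str) -> str:
    """Choose a backend pool from the request path (first matching prefix wins)."""
    request_line = raw.split(b"\r\n", 1)[0].decode("ascii")
    _method, target, _version = request_line.split(" ", 2)
    path = target.split("?", 1)[0]  # ignore the query string
    for prefix, pool in routes.items():
        if path.startswith(prefix):
            return pool
    return default


def add_header(raw: bytes, name: str, value: str) -> bytes:
    """Insert one header line just before the blank line that ends the headers."""
    head, sep, body = raw.partition(b"\r\n\r\n")
    return head + f"\r\n{name}: {value}".encode("ascii") + sep + body


# Hypothetical pool names; a real configuration would list server addresses.
routes = {"/api/": "api-pool", "/static/": "static-pool"}
request = b"GET /api/users?id=1 HTTP/1.1\r\nHost: example.com\r\n\r\n"

pool = route_request(request, routes, default="web-pool")  # 'api-pool'
modified = add_header(request, "X-Forwarded-For", "198.51.100.7")
```

An L4 balancer could not make either decision, since it never parses the request line or headers.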

One such example is the implementation of a sticky load-balancing policy. Recall that a sticky policy creates an association between clients and servers so that the same client is always served by the same server. A typical use case is an HTTP application that keeps its sessions on the application server (server-side sessions): if the client switches to another application server, the session data is lost.

The only way an L4 load balancer can implement such a sticky policy is with a hash-based policy as described above. However, such a solution is rather fragile, since associations may break if the number of application servers changes.

An L7 load balancer, however, can create such an association explicitly and manage it actively. If the number of application servers then changes, the existing associations remain. (As a side note, nowadays one can design web applications that use stateless HTTP requests, in which authentication is handled with JWTs and session data is stored on the client in cryptographically protected cookies. Such applications need neither server-side sessions nor, consequently, a sticky load-balancing policy. But there are other use cases that still require stickiness.)
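
One way an L7 balancer can manage the association explicitly is to record the chosen server in a cookie, sketched below. The cookie name `lb_server` is hypothetical, and storing the raw server name is a simplification for readability (real deployments often use an opaque or signed value). Unlike the hash-based policy, adding a server does not move existing clients.

```python
import itertools


class StickyBalancer:
    """L7 sticky policy: the chosen server is remembered in a cookie."""

    COOKIE = "lb_server"  # hypothetical cookie name

    def __init__(self, servers):
        self.servers = list(servers)
        self._counter = itertools.count()  # round-robin for first-time clients

    def add_server(self, server):
        self.servers.append(server)

    def pick(self, cookies: dict[str, str]) -> tuple[str, dict[str, str]]:
        """Return the server to use and any cookie the response should set."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.servers:
            return pinned, {}  # existing association: honor it
        # New client, or its server is gone: choose one and pin the client to it.
        server = self.servers[next(self._counter) % len(self.servers)]
        return server, {self.COOKIE: server}


lb = StickyBalancer(["s1", "s2", "s3"])
server, set_cookie = lb.pick({})   # first request: ('s1', {'lb_server': 's1'})
lb.add_server("s4")                # the pool grows...
again, _ = lb.pick(set_cookie)     # ...but the client still gets 's1'
```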

But load balancers can do even more. For instance, if a server is offline, the load balancer should not forward requests to it. This happens quite often: in bigger installations it is common to have a number of nodes offline at any time, waiting to be replaced or fixed. The load balancer therefore needs to know which servers are healthy and which are not, so that it can make its load-balancing decisions accordingly, that is, not route traffic to unavailable nodes.
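
A sketch of health-aware selection: round-robin that skips servers marked unhealthy. How health is determined (for example, periodic probes) is assumed to happen elsewhere and is not shown; the `mark` method is a hypothetical hook for such a checker.

```python
class HealthAwareRoundRobin:
    """Round-robin that skips servers currently marked as unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0

    def mark(self, server, is_healthy):
        # Intended to be called by a health checker (e.g. a periodic probe of
        # each server); the checker itself is not shown here.
        if is_healthy:
            self.healthy.add(server)
        else:
            self.healthy.discard(server)

    def pick(self):
        # Look at each server at most once, continuing the round-robin order.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = HealthAwareRoundRobin(["s1", "s2", "s3"])
lb.mark("s2", False)                  # s2 goes offline for maintenance
print([lb.pick() for _ in range(4)])  # ['s1', 's3', 's1', 's3']
```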

An even more interesting concept enabled by the load balancer is dynamic service scaling. As the gateway to all services, the load balancer is always aware of the load on the servers. If the current load on all servers is high, the load balancer can request that additional service instances be started. Similarly, if the load is low, it can save resources by requesting that redundant instances be shut down.
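
The scaling decision itself can be as simple as comparing per-server load against two thresholds. The sketch below assumes load is reported as a utilization fraction and uses hypothetical threshold values; the gap between `high` and `low` keeps the balancer from flapping between starting and stopping instances, though production autoscalers typically add cooldown periods as well.

```python
def scaling_decision(loads, high=0.75, low=0.25, min_instances=1):
    """Suggest +1 (start an instance), -1 (stop one) or 0 (no change).

    `loads` holds the current utilization of each server as a fraction in
    [0, 1]; the default thresholds are hypothetical. An empty pool counts as
    fully loaded, so it suggests starting an instance.
    """
    if all(load > high for load in loads):
        return +1
    if all(load < low for load in loads) and len(loads) > min_instances:
        return -1
    return 0


print(scaling_decision([0.90, 0.80, 0.85]))  # 1: every server is busy
print(scaling_decision([0.10, 0.20]))        # -1: both are nearly idle
print(scaling_decision([0.90, 0.50]))        # 0: load is mixed
```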

All such requests, made automatically and in real time, allow us to build truly scalable and highly available systems in which load balancers play an integral part.
